Quest hand tracking is perfect for slower experiences with light interaction.

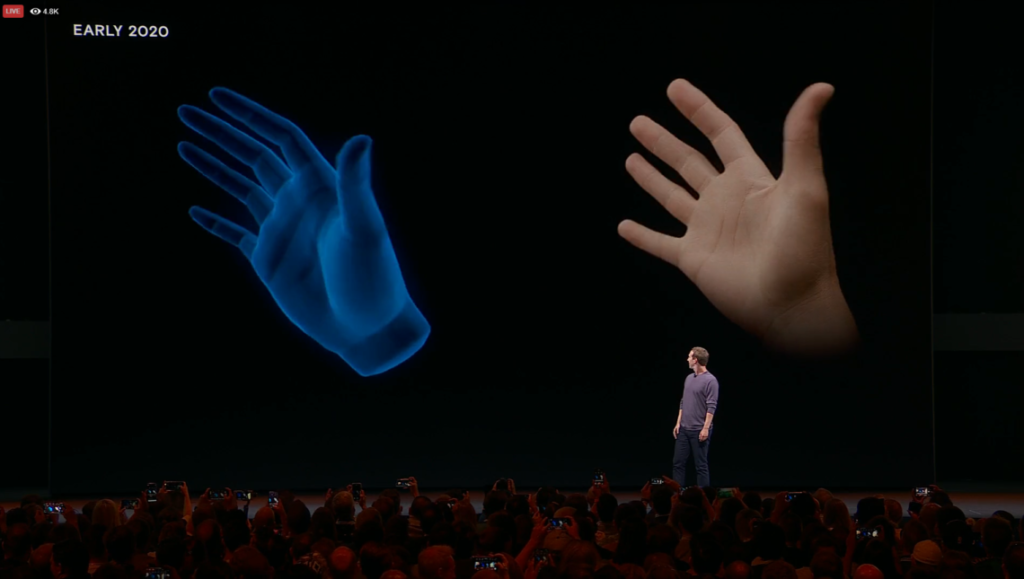

During Oculus Connect 6 in San Jose, CA, Mark Zuckerberg announced that the Oculus Quest would support hand and finger tracking without the need for any hand controllers, and of course, I couldn’t wait to try it. I was excited to see how this would change current and future immersive experiences and possibly push VR even closer to mass adoption.

In the end, I was not disappointed. Hand-tracking on the Quest without the need for any type of additional hardware shows a lot of promise for the future of the Quest and VR as a whole. It’s not perfect, but it is definitely a step in the right direction.

Hand tracking initially started off as an experimental research project at Facebook Reality Labs. Their computer vision team came up with a new method of using deep learning to understand the position of your fingers using just the monochrome cameras featured on the Quest, with no active depth-sensing cameras, additional sensors, or extra processors required. The technology approximates the shape of your hand and creates a set of 3D points to accurately represent your hand and finger movement in VR.

Basically, the Quest uses its built-in monochrome cameras to track each knuckle and the surface of your hands, then uses that data to mirror those movements in your VR environment.
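To make the "set of 3D points" idea concrete, here is a minimal Python sketch of how an app might use such points once it has them. It is not the Oculus SDK or Facebook's method: the `Point3D` type, the `thumb_tip` and `index_tip` keys, and the 2 cm threshold are all assumptions chosen for illustration.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Point3D:
    """One tracked hand keypoint, in meters (hypothetical representation)."""
    x: float
    y: float
    z: float


def distance(a: Point3D, b: Point3D) -> float:
    """Straight-line distance between two keypoints."""
    return math.dist((a.x, a.y, a.z), (b.x, b.y, b.z))


def is_pinching(hand: dict, threshold_m: float = 0.02) -> bool:
    """Treat the hand as pinching when thumb and index tips nearly touch.

    The 2 cm threshold is an assumed value, not a documented one.
    """
    return distance(hand["thumb_tip"], hand["index_tip"]) < threshold_m


# Two made-up poses: fingers spread apart, then brought together.
open_hand = {
    "thumb_tip": Point3D(0.00, 0.00, 0.30),
    "index_tip": Point3D(0.08, 0.02, 0.30),
}
pinched_hand = {
    "thumb_tip": Point3D(0.00, 0.00, 0.30),
    "index_tip": Point3D(0.01, 0.005, 0.30),
}

print(is_pinching(open_hand), is_pinching(pinched_hand))
```

Once the headset reports fingertip positions every frame, a gesture like a pinch comes down to simple geometry on those points.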

To demonstrate how this technology works, Facebook offered attendees a hands-on demo built around the VR game Elixir in the Oculus Connect 6 demo area.

The moment you put on the Quest headset, your hands are instantly in front of you. I was impressed at how quickly the Quest was able to calibrate my fingers and bring them to life inside the virtual environment. Your natural reaction is to just start moving your hands in every direction, waving them in front of you, clenching your fists, and spreading out your fingers just to see how accurate the tracking really is.

The tracking did a really good job of reading the distance between your hands and your face; that’s actually how you interact in Elixir. The game places you inside an otherworldly laboratory littered with oozing green liquids, ghoulish sound effects, and strange wooden machines, an aesthetic similar to something you might see in a Harry Potter film. A voice guides you and encourages you to interact with various objects scattered throughout the lab. Attendees could poke and prod bubbles floating from a spooky cauldron, wave their hands over flaming candles, and even dip their fingers into a strange goo and watch their hands change color.

Of course, the first thing I did was poke the floating eyeball. I won’t spoil what happens when you do, but let’s just say this is one item you shouldn’t agitate.

Another impressive element I noticed was how accurate the tracking was outside of the established field of view. When I stretched my hands out beyond the FOV of the headset and brought them back into peripheral vision, my virtual hands reentered the FOV exactly where they had left. No sudden reappearance or lag; instead, a seamless transition, keeping you immersed in the experience no matter what the position.

That being said, I did find that really quick movements caused the headset to lose hand tracking fairly easily. There would either be some latency issues with the fingers and hands, or the headset would just lose track altogether, causing the VR hands to flip or spin backward as the headset attempted to recalibrate.

Though it only took a few seconds to recalibrate, it was enough to break the immersion for me, and I found it a little frustrating.

So hand tracking might not be the best fit for fast-paced games such as Beat Saber or the much-anticipated Pistol Whip, but it could be a great way to interact in social VR experiences, such as the upcoming Facebook Horizon or AltspaceVR, and in other VR experiences where fast movements aren’t required, like Richie’s Plank Experience or PokerStars VR.

As for actions such as pulling up menus or teleporting around virtual environments, it’s possible that hand gestures like the ones used with the Microsoft HoloLens or Leap Motion could fill that role. There are also several existing VR experiences that feature their own unique gesture-activated systems, such as the popular mini-game social platform Rec Room, which allows users to pull up various menus and settings pages by holding up their hands and looking at a virtual watch.

Of course, the Facebook team has more than likely already accounted for this. If you’ve ever used Facebook’s soon-to-be-defunct Facebook Spaces platform, you know that users are able to pull up a small menu by flipping their hands over and touching their wrists. This passive haptic experience could easily show up in the Quest, which, by the way, could hypothetically use your arm as a way to bring in additional controller options through tapping.
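A "flip your hand over" gesture like the one described above could, in principle, be detected from three tracked points on the hand. The Python sketch below is only an illustration under stated assumptions: the choice of wrist, index knuckle, and pinky knuckle as inputs, the y-up coordinate system, and the 0.7 alignment threshold are all hypothetical, and none of this reflects how Facebook Spaces or the Quest actually implements its menus.

```python
import math

Vec3 = tuple  # (x, y, z) in meters; y is assumed to point up


def sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def cross(u: Vec3, v: Vec3) -> Vec3:
    return (
        u[1] * v[2] - u[2] * v[1],
        u[2] * v[0] - u[0] * v[2],
        u[0] * v[1] - u[1] * v[0],
    )


def palm_normal(wrist: Vec3, index_knuckle: Vec3, pinky_knuckle: Vec3) -> Vec3:
    """Unit vector perpendicular to the palm plane.

    Which side counts as the palm depends on left vs. right hand and on the
    order of the two knuckles; this sketch assumes one fixed convention.
    """
    n = cross(sub(index_knuckle, wrist), sub(pinky_knuckle, wrist))
    length = math.sqrt(n[0] ** 2 + n[1] ** 2 + n[2] ** 2)
    if length == 0.0:
        raise ValueError("points are collinear; palm plane is undefined")
    return (n[0] / length, n[1] / length, n[2] / length)


def is_palm_up(normal: Vec3, min_alignment: float = 0.7) -> bool:
    """True when the palm normal points mostly along +y (assumed threshold)."""
    return normal[1] >= min_alignment


# Made-up flat hand held out in front of the user.
wrist = (0.0, 0.0, 0.0)
index_knuckle = (0.03, 0.0, -0.09)
pinky_knuckle = (-0.03, 0.0, -0.09)

show_menu = is_palm_up(palm_normal(wrist, index_knuckle, pinky_knuckle))
print(show_menu)
```

A real system would likely also require the pose to be held for a moment, so that a passing hand rotation does not open the menu by accident.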

I’m really excited about hand tracking on the Quest and all of the possibilities it will open up. In the end, however, I actually found it a little uncomfortable not having a controller in my hands to manipulate objects in the virtual environment, and I found myself missing haptic feedback when I touched an object.

Like anything else, it’s a feature you will need to get used to. It’s a strange feeling not having any feeling when touching or grabbing things, something dedicated Leap Motion and HoloLens users are already used to.

Don’t get me wrong, I’ve always thought that VR experiences should require less hardware, but now that we are here, I’m thinking that maybe we do need the controller. Not for every experience, but definitely for some.

I do recognize the potential of hand tracking, and I can see it playing a really big role in how we handle everything from job training and education to entertainment, socializing, and much more.

Navah Berg, a social VR influencer who attended Oculus Connect 6, told VRScout, “Hand tracking is almost like taking your training wheels off.” Berg continued, “Removing controllers makes the experience so much more real because moving freely without equipment in your hands gives you the feeling of freedom and ultimate reality.”

Throwing on just a headset is simply an easier and more convenient experience, and as its hardware has evolved, Facebook has positioned itself as a company fully behind VR and AR. The Oculus Quest is shaping up to be a headset for the masses, one that could finally lead to the global adoption of VR technology.

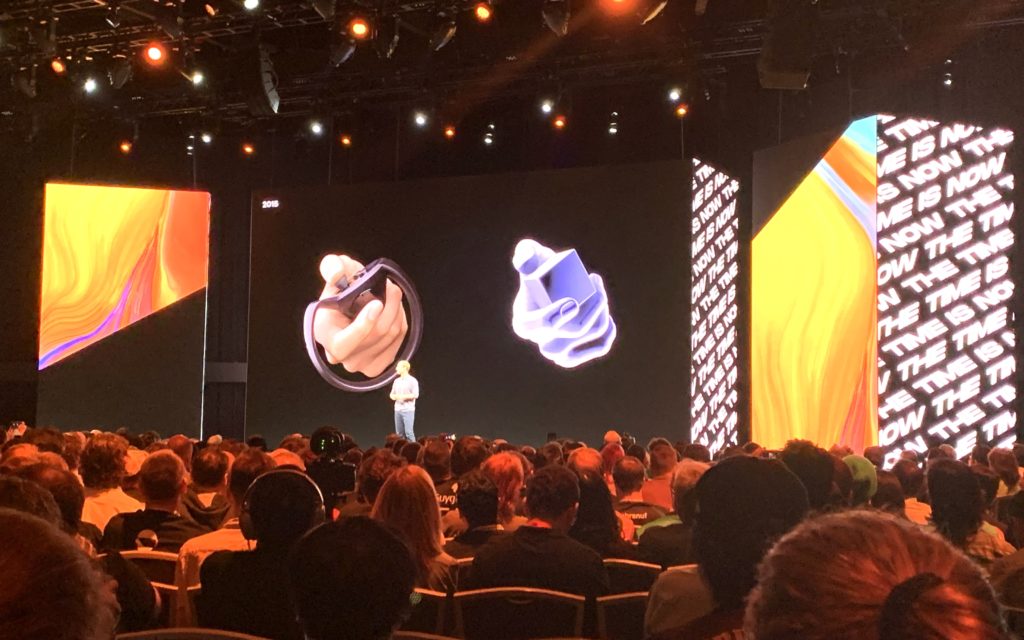

We’ve come a long way since Oculus’ first consumer product. When the Oculus Rift first shipped, it didn’t come with motion controls, just an Xbox One game controller. It was only months later that Rift owners could bring their hands into their VR experiences via the Oculus Touch.

Just six months ago, engaging with a VR experience meant obtaining a headset, sensors, hand controllers, and an expensive, gaming-level PC. Now all you need is a single headset and enough space that you won’t kick your pets. That’s a pretty big leap for VR, and hand tracking pushes us even further. In its current state, however, it needs more tweaking. But we’re getting there. Oh, we’re getting there.

Hand tracking arrives as an experimental feature on the Oculus Quest in early 2020.

Feature Image Credit: Facebook

The post Hands-On: Oculus Quest Hand Tracking Feels Great, But It’s Not Perfect appeared first on VRScout.

from VRScout https://ift.tt/2p5asMY

