At SIGGRAPH 2023 I tried a Meta research prototype with near-retinal angular resolution, dynamic focus, and dynamic distortion correction.

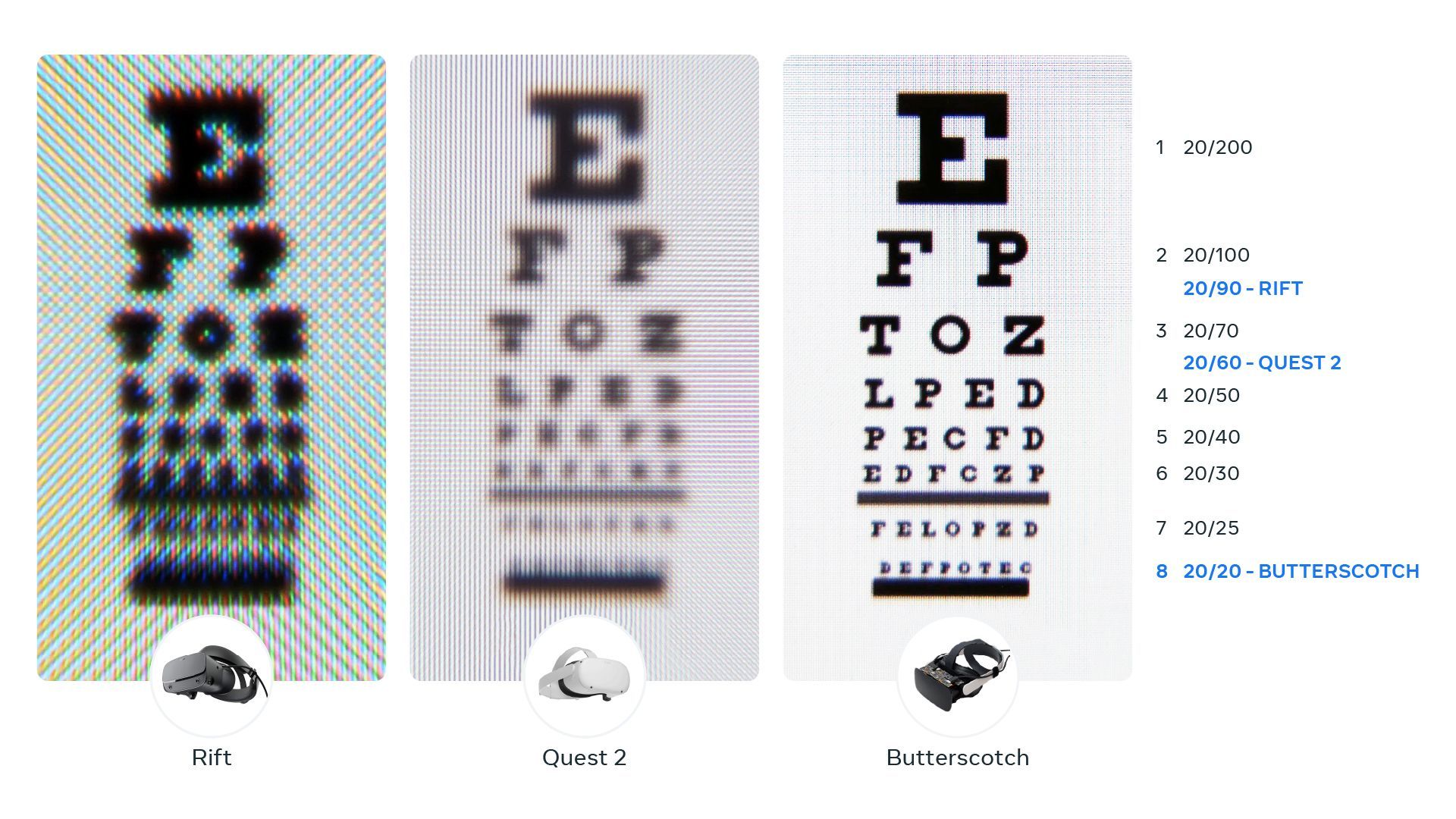

Butterscotch Varifocal is based on the original Butterscotch prototype Meta revealed last year. The Butterscotch prototypes deliver an angular resolution of 56 pixels per degree (PPD) of your vision, just short of the 60 pixels per degree generally accepted to be what the human eye can discern. That's almost three times the central angular resolution of Quest Pro, and double that of Bigscreen Beyond.

Meta's researchers didn't achieve this angular resolution with any breakthrough or specialized technology, however. The Butterscotch headsets use off-the-shelf 2880x2880 LCD displays that would deliver around 30 PPD in a typical VR headset, but pair them with lenses with around half the field of view. The purpose here is to demonstrate what retinal resolution feels like to eventually inform future product priority and tradeoff decisions. The prototype is not meant to propose any specific new technology for achieving retinal resolution at an acceptable field of view.
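The tradeoff described above is simple arithmetic, and a rough sketch makes it concrete. It assumes pixels are spread evenly across the field of view, which real lenses don't do, so treat the results as averages. The function names are illustrative and not from Meta.

```python
def average_ppd(pixels_across: int, fov_degrees: float) -> float:
    """Average pixels per degree, assuming pixels are spread evenly across the view."""
    return pixels_across / fov_degrees


def fov_for_ppd(pixels_across: int, target_ppd: float) -> float:
    """Field of view (degrees) a panel can cover at a given average pixels per degree."""
    return pixels_across / target_ppd


PANEL_PIXELS = 2880  # panel resolution quoted in the article

typical_fov = fov_for_ppd(PANEL_PIXELS, 30)       # the ~30 PPD "typical headset" case
butterscotch_fov = fov_for_ppd(PANEL_PIXELS, 56)  # the 56 PPD Butterscotch case

print(f"30 PPD -> {typical_fov:.1f} degrees")
print(f"56 PPD -> {butterscotch_fov:.1f} degrees")
print(f"ratio  -> {butterscotch_fov / typical_fov:.2f}")
```

Under that assumption, the same panel covers roughly half the field of view at 56 PPD, which lines up with the "around half" figure above.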

Varjo's existing $5000+ business headsets achieve retinal resolution in an even smaller field of view, at the very center of the view, and combine it with a peripheral display of much lower angular resolution.

As the name suggests though, Butterscotch Varifocal isn't just a demonstration of retinal resolution. It also includes the varifocal technology from Meta's 2018 Half-Dome prototype.

In VR each eye gets a separate perspective so you get stereo disparity, but that's just one cue your brain uses to determine depth. All current headsets on the market have fixed focus lenses. The image is focused at a fixed distance, usually a few meters. Your eyes will point (converge or diverge) toward virtual objects, but can't actually focus (accommodate) at their virtual distance. This is called the vergence-accommodation conflict, and it causes eye strain and can make virtual objects look blurry close up.
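To put rough numbers on that conflict, here is a minimal sketch. The 63 mm interpupillary distance and the 2 meter fixed focus are assumptions chosen for illustration, not figures for any specific headset. Focus mismatch is expressed in diopters (1 / distance in meters), the unit normally used for accommodation.

```python
import math


def vergence_angle_deg(distance_m: float, ipd_m: float = 0.063) -> float:
    """Angle between the two eyes' lines of sight when fixating a point straight ahead."""
    return math.degrees(2 * math.atan((ipd_m / 2) / distance_m))


def focus_mismatch_diopters(virtual_distance_m: float, focal_distance_m: float) -> float:
    """Gap between where the eyes converge and where the optics are focused, in diopters."""
    return abs(1 / virtual_distance_m - 1 / focal_distance_m)


FIXED_FOCUS_M = 2.0  # assumed fixed focal distance ("a few meters")

for distance in (2.0, 1.0, 0.5, 0.25):
    angle = vergence_angle_deg(distance)
    mismatch = focus_mismatch_diopters(distance, FIXED_FOCUS_M)
    print(f"{distance:>5} m: vergence {angle:5.2f} deg, mismatch {mismatch:.2f} D")
```

The mismatch is zero at the fixed focal distance and grows quickly as objects come closer, which is why the problem is most noticeable at arm's length and nearer.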

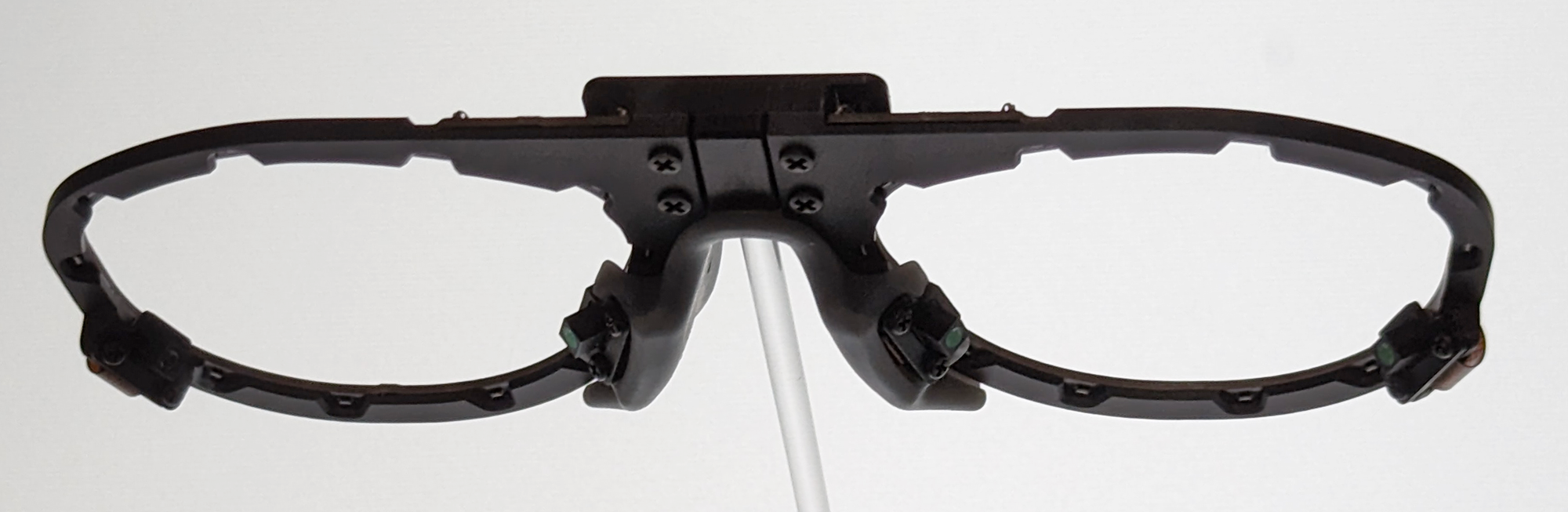

The Half-Dome prototype presented a solution: track where your eyes are pointing and rapidly mechanically move the display panels backward or forward to dynamically adjust focus. Butterscotch Varifocal uses the same eye tracking and mechanical actuators to achieve this.
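As a rough illustration of that control loop, the sketch below estimates the fixation distance from the vergence angle, then uses the thin-lens equation to find where the panel would need to sit. This is a textbook idealization, not Meta's actual method. The 40 mm focal length and 63 mm interpupillary distance are made-up values, and the distance between eye and lens is ignored.

```python
import math

MIN_FOCUS_M = 0.20  # closest focus distance mentioned in the article


def fixation_distance_m(vergence_deg: float, ipd_m: float = 0.063) -> float:
    """Distance to the point both eyes are aimed at, from the angle between their gaze rays."""
    if vergence_deg <= 0:
        return math.inf  # parallel gaze: looking at infinity
    return (ipd_m / 2) / math.tan(math.radians(vergence_deg) / 2)


def display_distance_mm(focus_distance_m: float, focal_length_mm: float = 40.0) -> float:
    """Panel-to-lens distance that places the virtual image at focus_distance_m.

    Thin-lens magnifier: 1/s = 1/f + 1/d, with the virtual image on the panel's side.
    """
    focus_distance_m = max(focus_distance_m, MIN_FOCUS_M)
    lens_power = 1000.0 / focal_length_mm  # diopters
    focus_demand = 1.0 / focus_distance_m  # diopters (0.0 at infinity)
    return 1000.0 / (lens_power + focus_demand)


for vergence in (0.0, 1.8, 7.2, 17.9):
    distance = fixation_distance_m(vergence)
    print(f"vergence {vergence:5.1f} deg -> focus {distance:6.2f} m "
          f"-> panel at {display_distance_mm(distance):.2f} mm")
```

With these illustrative numbers, the panel only needs to travel a few millimeters to sweep focus from infinity down to 20 centimeters, which gives a sense of why a small mechanical actuator can do the job.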

The result of this combination of near-retinal angular resolution and dynamic focus adjustment was a view into a virtual world with no visible pixelation or aliasing, where I could make out even the tiniest details in the smallest objects, and read text of any size I could read in the physical world. The virtual tablet and phones displaying articles with small text didn't even need to use the compositor layers John Carmack repeatedly tells developers are essential with current headsets. The pixel density here was just so high that tricks like that are no longer needed.

The limiting factor for making out fine details was now my eyesight, not the headset's display system. It's the opposite situation to VR headsets today and it was a tantalizing look at the visual quality consumer VR will one day offer.

This was my first time trying a varifocal headset - very few people outside Meta have - and I was able to toggle it on and off with a button press. When toggled on, virtual objects even remained sharp when brought incredibly close to my eyes, down to a minimum of 20 centimeters. That has practical advantages, but there was something else more subtle I noticed when varifocal was on. The focus being correct made the virtual world and the objects within it suddenly look and feel more "real". In fact, some of the demo objects were so detailed I'd go as far as to say they felt entirely real.

I asked Meta's Display Systems Research Director Douglas Lanman about this. He told me that while this effect was something Meta researchers are aware of and discuss, the subjective feeling of visual realness is a lot harder to quantify and assess than other aspects of displays.

There was a small latency between looking at an object and the displays moving to adjust focus. But as with the retinal resolution aspect, the purpose of this prototype is to showcase what varifocal feels like, not to claim a specific technology for practically delivering it. Butterscotch Varifocal actually uses the original Half-Dome actuators. In late 2019 Meta revealed Half-Dome 2, with faster, more reliable actuators, and Half-Dome 3, which has no moving parts at all and instead changes focus with tunable lens layers.

Dynamic Distortion Correction

There's also a third technology included in Butterscotch Varifocal that didn't get as much attention but is vital to visually convincing VR too: dynamic distortion correction.

As well as the impressive angular resolution and dynamic focus, I had also noticed Butterscotch Varifocal delivered excellent optical fundamentals, with no pupil swim or other geometric distortion. After the demo, I discovered this was because it had dynamic distortion correction.

Modern VR headset lenses magnify a display to a relatively wide field of view, but this results in geometric distortion, typically pincushion distortion. One of the key innovations of Palmer Luckey's original prototypes was to correct this in software by outputting an image with the inverse (barrel) distortion to the display. However, the optical distortion changes slightly based on the position of your eye relative to the lens, and conventional software distortion correction is only designed for an eye that is dead center. Dynamic distortion correction means the system generates a new correction each frame based on the position of your eye, enabled by eye tracking.
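The difference between a static and a dynamic correction can be sketched with a simple radial polynomial model. Everything here is hypothetical: the coefficients, the linear dependence on pupil offset, and the function names are invented for illustration. A real system would use a calibrated model of the actual lens and the pupil's full 3D position.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Correction:
    center_x: float  # distortion center in normalized image coordinates
    center_y: float
    k1: float        # radial polynomial coefficients
    k2: float


# Made-up calibration values, for illustration only.
BASE_K1 = -0.20
BASE_K2 = -0.02
CENTER_SHIFT_PER_MM = 0.01
K1_CHANGE_PER_MM = 0.03

# The conventional approach: one correction, valid for a centered eye.
STATIC_CORRECTION = Correction(0.0, 0.0, BASE_K1, BASE_K2)


def correction_for_pupil(pupil_x_mm: float, pupil_y_mm: float) -> Correction:
    """Hypothetical per-frame correction derived from the tracked pupil offset."""
    offset_mm = math.hypot(pupil_x_mm, pupil_y_mm)
    return Correction(
        center_x=CENTER_SHIFT_PER_MM * pupil_x_mm,
        center_y=CENTER_SHIFT_PER_MM * pupil_y_mm,
        k1=BASE_K1 * (1.0 + K1_CHANGE_PER_MM * offset_mm),
        k2=BASE_K2,
    )


def predistort(u: float, v: float, c: Correction) -> tuple[float, float]:
    """Apply the inverse-distortion warp to one normalized image coordinate."""
    dx, dy = u - c.center_x, v - c.center_y
    r2 = dx * dx + dy * dy
    scale = 1.0 + c.k1 * r2 + c.k2 * r2 * r2
    return (c.center_x + dx * scale, c.center_y + dy * scale)


point = (0.5, 0.0)
static_result = predistort(*point, STATIC_CORRECTION)
dynamic_result = predistort(*point, correction_for_pupil(2.0, 0.0))
print("static :", static_result)
print("dynamic:", dynamic_result)
```

In this toy model the two corrections agree when the pupil is centered and diverge as it moves off axis. That gap is what a static correction leaves uncorrected. The per-frame work is only a handful of multiplications per coordinate, in line with the technique being described as computationally inexpensive.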

I was told this is a computationally inexpensive technique, so I asked Lanman why it isn't used in Quest Pro, since it too has eye tracking. While he didn't directly answer that, he did talk about the importance of the eye tracking hardware being good enough for dynamic distortion correction to work well because it needs to measure the exact position of your pupil in 3D space, not just the gaze direction. Notably, Quest Pro has just one tracking camera pointed at each eye while Butterscotch Varifocal has two, just like Apple Vision Pro.

How Far Away Is Retinal & Varifocal?

Last year Meta described retinal resolution as "on our product roadmap", so how long until we see any of this in actual products?

Lanman wouldn't answer of course, as he's "just a researcher", but he did express skepticism toward the complexity of multi-display approaches like Varjo's. Assuming retinal resolution will arrive through raw pixel density, achieving it in the center view of headsets with standard field of view would require roughly 5K per eye displays. As field of view widens that requirement will get even higher though - more than 10K per eye for full human field of view, and up to 16K per eye for retinal resolution across the entire view instead of just in the center.
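For a sense of where figures like these come from, a back-of-the-envelope estimate multiplies the target pixels per degree by the field of view. The field of view values below are assumptions for illustration. The model treats pixel density as uniform, so it won't reproduce the higher figure for retinal resolution across the entire view, which depends on how real lenses distribute pixels.

```python
RETINAL_PPD = 60  # the "retinal" threshold quoted in the article


def pixels_needed(fov_degrees: float, target_ppd: float = RETINAL_PPD) -> int:
    """Pixels required along one axis for a given field of view, assuming uniform density."""
    return round(fov_degrees * target_ppd)


# Assumed fields of view, in degrees, for illustration only.
for fov in (50, 90, 180):
    print(f"{fov:>3} degrees -> {pixels_needed(fov)} pixels across")
```

Under these assumptions a roughly 90 degree view lands in 5K territory and a roughly 180 degree view passes 10K.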

Apple Vision Pro is set to arrive next year with around 3.5K per eye OLED microdisplays, though they are reportedly extremely difficult to manufacture, and the resulting low yield is a key contributor to the headset's $3500 price. Still, the market demand for such high resolution microdisplays is only now emerging, and as display companies compete to figure out better techniques to manufacture them, it's reasonable to expect prices to come down, output to increase, and resolution to get even higher over time.

But what about varifocal? In an early 2020 talk Lanman described Half-Dome 3's electronic varifocal approach as "almost ready for prime time", at a higher "Technology Readiness Level" than any previous prototype. Last year Mark Zuckerberg suggested varifocal could arrive "in the second half of the decade", meaning somewhere between 2026 and 2029.

Final Thoughts

When revealing the original Butterscotch and Starburst (which we tried) last year, Lanman described his team's goal as one day delivering a display system that passes a "visual Turing test", meaning it feels as if you're looking through a transparent glass visor, not a display at all.

Butterscotch Varifocal wouldn't fully pass such a test. Unlike Starburst, its conventional LCD displays don't come close to delivering the brightness, dynamic range, or contrast of the real world. But it can deliver the detail and sharpness, and that alone was stunning to behold. It's yet another reminder that headsets today are still just the beginning of VR, and it has a long and promising path ahead.

from UploadVR https://ift.tt/NyRvX3W
