Researchers from Intel’s Intelligent Systems Lab have revealed a new method for enhancing computer-generated imagery with photorealistic graphics. Demonstrated with GTA V, the approach uses deep learning to analyze frames generated by the game and then generate new frames drawing on a dataset of real images. While the technique in its research state is too slow for real gameplay today, it could represent a fundamentally new direction for real-time computer graphics in the future.

Despite being released back in 2013, GTA V remains a pretty darn good looking game. Even so, it’s far from what would truly fit the definition of “photorealistic.”

Although we’ve been able to create pre-rendered truly photorealistic imagery for quite some time now, doing so in real-time is still a major challenge. While real-time raytracing takes us another step toward realistic graphics, there’s still a gap between even the best looking games today and true photorealism.

Researchers from Intel’s Intelligent Systems Lab have published research demonstrating a state-of-the-art approach to creating truly photorealistic real-time graphics by layering a deep-learning system on top of GTA V’s existing rendering engine. The results are quite impressive, showing stability that far exceeds that of similar methods.

In concept, the method is similar to NVIDIA’s Deep Learning Super Sampling (DLSS). But while DLSS is designed to ingest an image and then generate a sharper, higher-resolution version of the same image, the method from the Intelligent Systems Lab ingests an image and then enhances its photorealism by drawing from a dataset of real-life imagery, specifically a dataset called Cityscapes, which features street-view imagery from the perspective of a car. The method creates an entirely new frame by extracting features from the dataset which best match what’s shown in the frame originally generated by the GTA V game engine.

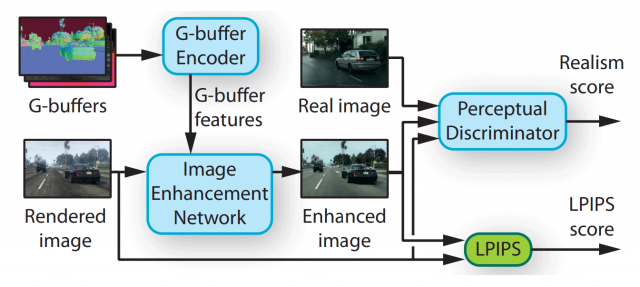

This ‘style transfer’ approach isn’t entirely new, but what is new with this approach is the integration of G-buffer data—created by the game engine—as part of the image synthesis process.

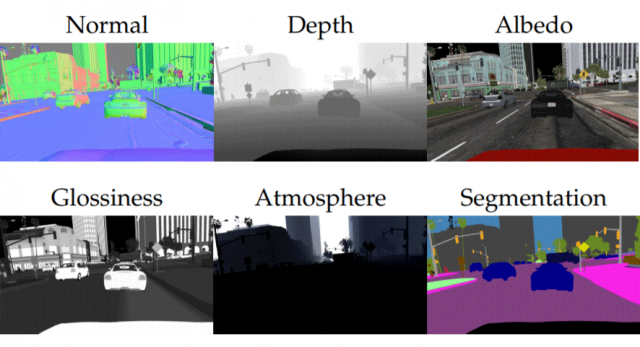

A G-buffer is a representation of each game frame which includes information like depth, albedo, normal maps, and object segmentation, all of which are used in the game engine’s normal rendering process. Rather than looking only at the final frame rendered by the game engine, the method from the Intelligent Systems Lab looks at all of the extra data available in the G-buffer to make better guesses about which parts of its photorealistic dataset it should draw from in order to create an accurate representation of the scene.
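To make the idea of "extra data per pixel" concrete, here is a minimal sketch of how a rendered color and its G-buffer channels could be combined into one feature vector. This is not the researchers' code or network input format; the names `GBufferPixel`, `pixel_features`, and `frame_features`, the channel order, and the one-hot segmentation encoding are all assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import List

# Hypothetical structure: it only illustrates the kind of per-pixel data a
# G-buffer carries, not the format used in the research.


@dataclass
class GBufferPixel:
    depth: float          # distance from the camera
    albedo: List[float]   # base surface color (r, g, b) before lighting
    normal: List[float]   # surface orientation (x, y, z)
    segment: int          # object class id, e.g. 0 = road, 1 = car, 2 = sky


def pixel_features(rgb: List[float], g: GBufferPixel, num_classes: int) -> List[float]:
    """Concatenate the final rendered color with the G-buffer channels."""
    # Encode the class id as a one-hot vector so no ordering is implied.
    one_hot = [1.0 if i == g.segment else 0.0 for i in range(num_classes)]
    return list(rgb) + [g.depth] + list(g.albedo) + list(g.normal) + one_hot


def frame_features(frame, gbuffer, num_classes: int):
    """Build an H x W grid of feature vectors from a frame and its G-buffer."""
    return [
        [pixel_features(rgb, g, num_classes) for rgb, g in zip(frame_row, g_row)]
        for frame_row, g_row in zip(frame, gbuffer)
    ]


# Usage: a single "car" pixel, with three assumed object classes.
car = GBufferPixel(depth=12.5, albedo=[0.2, 0.2, 0.2], normal=[0.0, 1.0, 0.0], segment=1)
features = pixel_features([0.5, 0.4, 0.3], car, num_classes=3)
print(len(features))  # 3 color + 1 depth + 3 albedo + 3 normal + 3 classes
```

The point of the sketch is only that a system given this richer vector knows far more about a pixel (how far away it is, which way the surface faces, what object it belongs to) than one given the three final color values alone.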

This approach is what gives the method its great temporal stability (moving objects look geometrically consistent from one frame to the next) and semantic consistency (objects in the newly generated frame correctly represent what was in the original frame). The researchers compared their method to other approaches, many of which struggled with those two points in particular.

– – — – –

While the method currently runs at what the researchers (Stephan R. Richter, Hassan Abu AlHaija, and Vladlen Koltun) call “interactive rates,” it’s still too slow for practical use in a videogame today, hitting just 2 FPS on an NVIDIA RTX 3090 GPU. In the future, however, the researchers believe that the method could be optimized to work in tandem with a game engine (instead of on top of it), which could speed the process up to practically useful rates, perhaps one day bringing truly photorealistic graphics to VR.
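For a rough sense of the gap, the 2 FPS figure can be converted to a per-frame time and compared with a target frame rate. The 90 FPS target below is an assumption chosen as a typical VR headset refresh rate; it is not a number from the researchers, and the function names are illustrative.

```python
def frame_time_ms(fps: float) -> float:
    """Time available (or spent) per frame, in milliseconds."""
    return 1000.0 / fps


def required_speedup(current_fps: float, target_fps: float) -> float:
    """How many times faster the method would need to run."""
    return target_fps / current_fps


REPORTED_FPS = 2.0   # figure quoted in the article (RTX 3090)
TARGET_FPS = 90.0    # assumption: a common VR refresh rate, not from the paper

print(frame_time_ms(REPORTED_FPS))                   # 500.0 ms per frame today
print(round(frame_time_ms(TARGET_FPS), 1))           # 11.1 ms budget at the target
print(required_speedup(REPORTED_FPS, TARGET_FPS))    # 45.0x faster needed
```

Under that assumed target, the enhancement step would have to shrink from about half a second per frame to roughly 11 milliseconds, which is why tighter integration with the game engine matters.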

“Our method integrates learning-based approaches with conventional real-time rendering pipelines. We expect our method to continue to benefit future graphics pipelines and to be compatible with real-time ray tracing,” the researchers conclude. […] “Since G-buffers that are used as input are produced natively on the GPU, our method could be integrated more deeply into game engines, increasing efficiency and possibly further advancing the level of realism.”

The post Intel Researchers Give ‘GTA V’ Photorealistic Graphics, Similar Techniques Could Do the Same for VR appeared first on Road to VR.
