The latest version of the Oculus Integration for Unity, v23, adds experimental OpenXR support for Quest and Quest 2 application development. A new technique for reducing positional latency called ‘Phase Sync’ has been added to both the Unity and Unreal Engine 4 integrations; Oculus recommends that all Quest developers consider using it.

OpenXR Support for Oculus Unity Integration

OpenXR, the industry-backed standard that aims to streamline the development of XR applications, has taken several major steps this year toward becoming production-ready. Today Oculus released new development tools that add experimental OpenXR support for Quest and Quest 2 applications built with Unity.

OpenXR aims to allow developers to build a single application which is compatible with any OpenXR headset, rather than needing to build a different version of the application for each headset runtime.

While Unity is working on its own OpenXR support, the newly released v23 Oculus Integration for Unity adds support for an “OpenXR experimental plugin for Oculus Quest and Oculus Quest 2.” This should allow for the development of OpenXR applications based on the features provided by the Oculus Integration for Unity.

Earlier this year Oculus released OpenXR support for building native Quest and Rift applications as well.

Phase Sync Latency Reduction in Unity and Unreal Engine

The v23 Oculus Integrations for Unity and for Unreal Engine 4 also bring a new latency-reduction technology called Phase Sync, which can reduce positional tracking latency with ‘no performance overhead’, according to Oculus. The company recommends “every in-development app to enable [Phase Sync], especially if your app is latency sensitive (if it uses hand tracking, for example).”

While Quest has long used Asynchronous Timewarp to reduce head-rotation latency by warping the rendered frame to match the most recent rotational data just before it goes to the display, positional tracking doesn’t benefit from this technique.

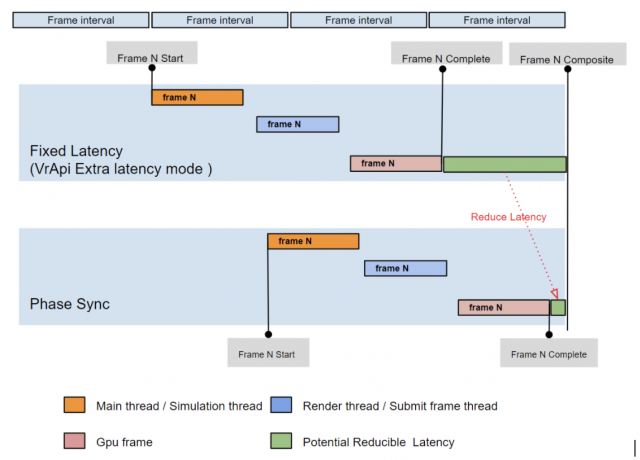

One way to reduce positional tracking latency is to minimize the amount of time between when a frame starts rendering and when it actually reaches the display. Ideally the frame will finish rendering just before being sent to the display; if it finishes early, all of the time between when the frame is finished and when it is sent to the display becomes added positional latency.
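
The arithmetic behind this can be sketched in a few lines of Python. This is only an illustration: the function name `added_latency_ms`, the 72 Hz refresh rate, and the 6 ms render time are assumptions chosen for the example, not figures from Oculus.

```python
def added_latency_ms(render_start_ms: float, render_time_ms: float, deadline_ms: float) -> float:
    """Return the idle time between a frame finishing and its display deadline."""
    finish_ms = render_start_ms + render_time_ms
    if finish_ms > deadline_ms:
        raise ValueError("frame would miss its display deadline")
    return deadline_ms - finish_ms


# Assumed numbers for illustration: a 72 Hz display and a 6 ms render time.
frame_period_ms = 1000.0 / 72.0

# Starting as early as possible leaves the finished frame waiting.
early = added_latency_ms(0.0, 6.0, frame_period_ms)

# Starting 7 ms before the deadline leaves only 1 ms of waiting.
late = added_latency_ms(frame_period_ms - 7.0, 6.0, frame_period_ms)

print(f"start early: {early:.2f} ms idle, start late: {late:.2f} ms idle")
```

With these assumed numbers, the early-starting frame sits finished for roughly 7.9 ms, while the late-starting frame waits only about 1 ms.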

Phase Sync introduces dynamic frame timing that adjusts on the fly so that each frame finishes rendering as close as possible to the moment it is needed, rather than sitting idle before display.

> Unlike the Oculus PC SDK, the Oculus Mobile SDK has been using fixed-latency mode to manage frame timing since its inception. The philosophy behind fixed-latency mode is to finish everything as early as possible to avoid stale frames. It achieves this goal well, but with our release of Quest 2, which has significantly more CPU and GPU compute than our original Quest, a lot of apps can finish rendering their frames earlier than planned. As a result, we tend to see more “early frames” […]
>
> Compared with fixed-latency mode, Phase Sync handles frame timing adaptively according to the app’s workload. The aim is to have the frame finish rendering right before our compositor needs the completed frame, so it can save as much latency as possible, and also not missing any frames. The difference between Phase Sync and fixed-latency mode can be illustrated in the following graph on a typical multi-threaded VR app.
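
As a rough, independent illustration of the contrast (not Oculus's graph, and not how Phase Sync is actually implemented), the toy simulation below compares an "always start as early as possible" policy with an adaptive one that delays the start based on recent render times. Every name here (`fixed_start`, `adaptive_start`, `simulate`), the 72 Hz refresh rate, the 1 ms safety margin, and the 5-frame history window are assumptions made up for the sketch.

```python
from collections import deque

FRAME_PERIOD_MS = 1000.0 / 72.0  # assumed 72 Hz display


def fixed_start(history):
    """Fixed-latency style policy: always begin at the start of the period."""
    return 0.0


def adaptive_start(history, margin_ms=1.0):
    """Adaptive policy: delay the start so the slowest recent frame,
    plus a safety margin, would still finish by the deadline."""
    if not history:
        return 0.0  # no data yet, so be conservative
    budget_ms = max(history) + margin_ms
    return max(0.0, FRAME_PERIOD_MS - budget_ms)


def simulate(render_times_ms, choose_start, window=5):
    """Return (average idle ms before the deadline, number of missed frames)."""
    history = deque(maxlen=window)
    idle, missed = [], 0
    for render_ms in render_times_ms:
        finish_ms = choose_start(history) + render_ms
        if finish_ms > FRAME_PERIOD_MS:
            missed += 1
        else:
            idle.append(FRAME_PERIOD_MS - finish_ms)
        history.append(render_ms)
    avg_idle = sum(idle) / len(idle) if idle else 0.0
    return avg_idle, missed


workload = [6.0] * 10  # assumed steady 6 ms render time
fixed_idle, fixed_missed = simulate(workload, fixed_start)
adaptive_idle, adaptive_missed = simulate(workload, adaptive_start)
print(f"fixed: {fixed_idle:.2f} ms idle, {fixed_missed} missed")
print(f"adaptive: {adaptive_idle:.2f} ms idle, {adaptive_missed} missed")
```

On this steady workload the adaptive policy leaves far less idle time per frame without missing any deadlines. A real scheduler also has to cope with sudden spikes in render time, which is where the choice of safety margin would matter.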

Luckily, turning on Phase Sync is as easy as checking a box with the v23 Unity and Unreal Engine integrations from Oculus (details here).

The post Oculus Unity Plugin Adds Experimental OpenXR Support & Latency-reducing Tech for Quest appeared first on Road to VR.

from Road to VR https://ift.tt/3m1MzgW
