Snap Inc. goes all-in on AR with improvements to Lens Studio and the Snapchat app.

This week Snap Inc. held its annual developer event, where it revealed a new camera and a plethora of updates to both Lens Studio and the Snapchat app, further expanding the platform's existing catalog of groundbreaking AR content, which is currently accessed by over 170 million users daily.

This includes voice-activated UI navigation, shared AR Lenses capable of spanning multiple city blocks, new scanners capable of identifying dog breeds and plant species, and machine learning functionality, just to name a few.

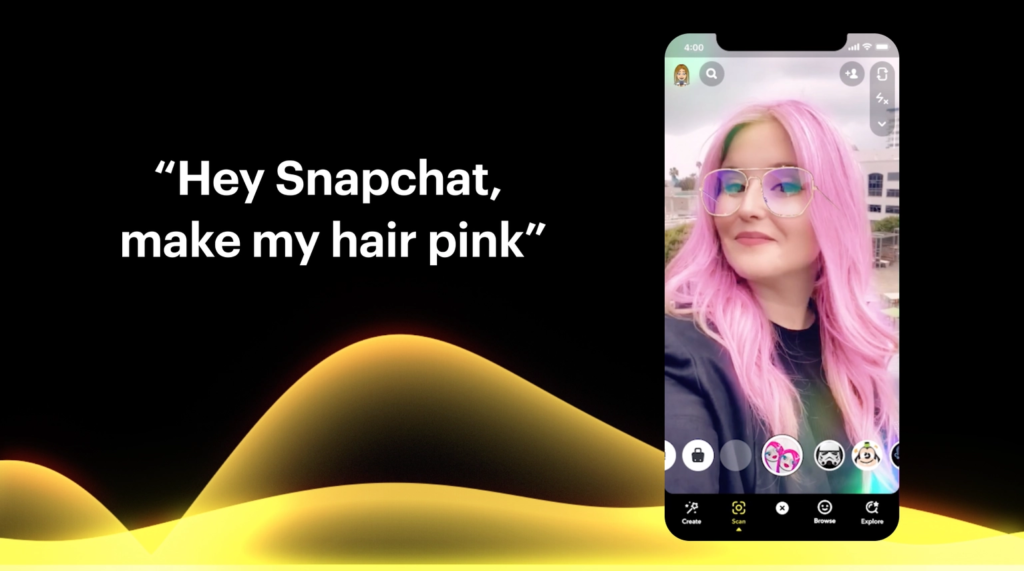

Voice-Activated Lenses

As Snapchat continues to introduce AR content to its platform, locating specific Lenses among the seemingly endless catalog of both professional and user-generated creations has become increasingly difficult. With the introduction of Voice Scan, you can now tell the app exactly which Lens you’re searching for.

Simply press and hold the camera select screen and speak into your smartphone's mic. Snapchat will then pull up any closely related results based on your verbal description.

Local Lenses

While not fully available to the public just yet, yesterday Snap showcased a preview of its new Local Lenses, shared AR Lenses capable of spanning entire city blocks.

Existing as persistent digital worlds, Local Lenses will allow you to collaborate with other Snapchatters on massive AR projects built right on top of your neighborhood, such as decorating local buildings with colorful augmented paint.

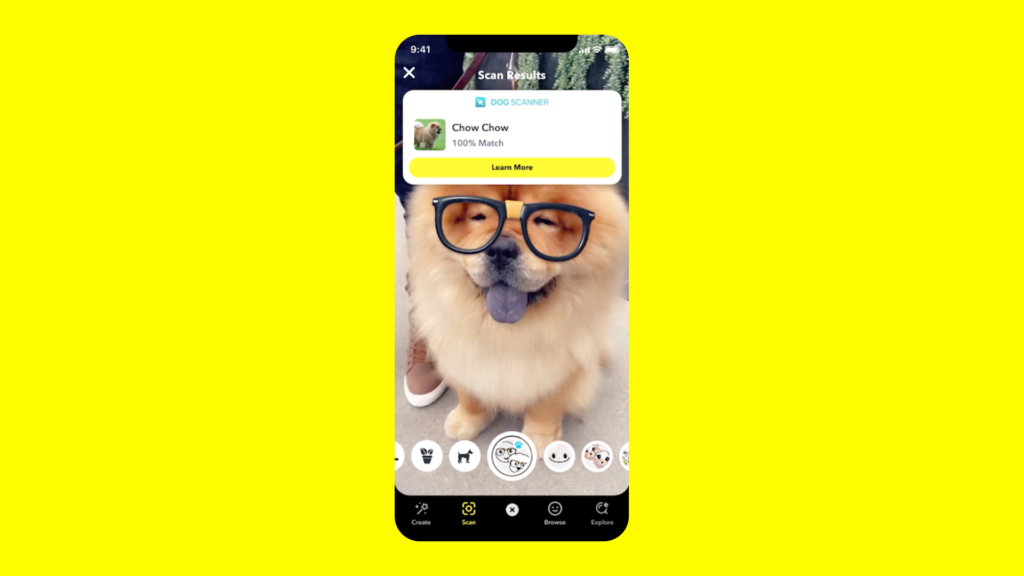

Scan

Originally introduced back in 2019, Snapchat’s AR utility platform “Scan” uses the Snapchat camera to identify real-world objects and access relevant information. By partnering with specific companies, Snap has been able to dramatically expand the functionality of Scan, whether it be identifying specific music via Shazam or solving math problems with Photomath.

This week Snap announced two new Scan partners: PlantSnap and Dog Scanner. Available now, PlantSnap allows Snapchatters to use their Snap camera to identify a whopping 90% of all known plants and trees. Similar to PlantSnap, Dog Scanner uses the Snapchat camera to recognize over 400 breeds of canine. Additional upcoming partners include Nutrition Scanner, which rates the quality of ingredients in packaged food, as well as several brand partnerships, including Louis Vuitton.

Lens Studio & SnapML

In addition to the aforementioned updates to the Snapchat app, Snap’s Lens Studio creator tool also received some love in the form of SnapML, an exciting new feature that allows creators to import custom machine learning models into their projects, further expanding the capabilities of Snapchat’s AR Lenses. Snap has already announced partnerships with Wannaby, Prisma, CV2020, and numerous other Official Lens Creators dedicated to the ongoing development of SnapML.

Additional updates to Lens Studio include improved facial tracking via Face Landmarks and Face Expressions, Hand Gesture templates, a simplified user interface, and a new Foot Tracking template, designed by Wannaby using SnapML, which allows for AR foot interactions.

Snapchat is available free on up-to-date iOS and Android devices. Lens Studio can also be downloaded free for Windows or Mac.

Image Credit: Snap Inc.

The post Snapchat AR Lenses Update Adds Voice Commands, Shared Worlds, Dog & Plant Scanner appeared first on VRScout.

